The shift toward three-dimensional integrated circuits (3D-ICs) marks a transformative leap in semiconductor design, driven by the demand for higher performance, smaller form factors, and lower power consumption. However, this innovation introduces significant challenges in maintaining structural integrity, which is critical for device reliability. Semiconductor engineers must understand the engineering challenges that affect chip package structural integrity in 3D-ICs, along with the solutions available to address them.

The Driving Factors in 3D-IC Adoption

AMD MI300X

The adoption of 3D-ICs is driven by key business factors, such as the growing demand for miniaturization and market trends in consumer electronics and the Internet of Things (IoT), which favor compact devices with enhanced functionality. Performance needs are also a significant driver, with 3D-ICs offering reduced signal latency and increased bandwidth compared to traditional printed circuit boards (PCBs). Energy efficiency is equally critical: die stacking shortens interconnects and reduces power consumption, a key requirement for mobile and edge devices. Finally, advanced applications in AI, 5G, and the automotive industry require high computational capabilities, making 3D-ICs essential for meeting these evolving demands.

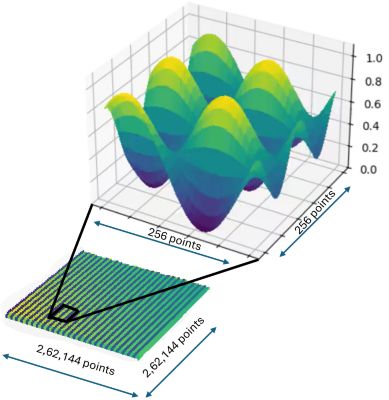

A 2.5D-IC layout including 3D high-bandwidth memory (HBM)

Solving Engineering Challenges in IC Development

While electronics reliability and signal and power integrity (SI/PI) have long been cornerstones of IC design with well-established techniques, the advent of 2.5D/3D-ICs introduces unprecedented thermal and structural challenges. Mechanical and thermomechanical stresses during manufacturing and operation pose significant risks to structural reliability, and traditional design methods struggle to address them because the added model complexity lengthens design cycles and reduces yields.

The vertical stacking of chips generates high stress, increasing the risk of warpage or cracking. Additionally, dense stacking exacerbates heat buildup, which requires advanced thermal management solutions. Even minor warpage can cause assembly defects, leading to low yields. Therefore, the 3D-IC structure must be flexible enough to minimize stress while ensuring that any warpage remains within the prescribed limit.
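To give a feel for the magnitude of thermomechanical stress, the sketch below applies the textbook equi-biaxial estimate for a thin layer bonded to a much thicker substrate, sigma = E / (1 - nu) * (alpha_substrate - alpha_film) * delta_T. It is a first-order hand calculation, not the method used by any simulation tool named in this article, and the material values are illustrative, order-of-magnitude assumptions for copper on silicon rather than measured data.

```python
def biaxial_thermal_stress(e_film_pa, nu_film, cte_film, cte_substrate, delta_t):
    """Equi-biaxial elastic stress (Pa) in a thin film on a thick substrate.

    Positive result = tension in the film. Assumes linear elasticity and a
    film much thinner than the substrate (both are simplifying assumptions).
    """
    mismatch_strain = (cte_substrate - cte_film) * delta_t
    return e_film_pa / (1.0 - nu_film) * mismatch_strain


# Illustrative values only (assumptions, not data from the article).
E_CU = 120e9      # Young's modulus of copper, Pa
NU_CU = 0.34      # Poisson's ratio of copper
CTE_CU = 17e-6    # thermal expansion of copper, 1/K
CTE_SI = 2.6e-6   # thermal expansion of silicon, 1/K
DELTA_T = -200.0  # cooling from a process temperature to room temperature, K

stress_pa = biaxial_thermal_stress(E_CU, NU_CU, CTE_CU, CTE_SI, DELTA_T)
print(f"Estimated film stress: {stress_pa / 1e6:.0f} MPa")
```

With these assumed inputs the elastic estimate comes out at roughly 520 MPa in tension, which is large enough that plastic deformation would likely need to be considered. That is one reason detailed finite element analysis is used instead of hand formulas for real packages.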

Interconnect reliability also emerges as a critical factor, with through-silicon vias (TSVs) and solder microbumps needing to withstand stress and fatigue due to thermal cycling. The reduced dimensions of microbumps and copper pillar bumps increase their susceptibility to cracking and fatigue failure. Additionally, the complexity of multiphysics simulations involving thermal, mechanical, and electrical coupling presents computational challenges that demand robust tools and methodologies.
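Thermal-cycling fatigue of solder joints is often approximated with the Coffin-Manson relation, delta_eps_p / 2 = eps_f' * (2 * N_f)^c, which links the plastic strain range per cycle to the number of cycles to failure. The sketch below solves that relation for N_f. The article does not state which fatigue model its tools use, so this is only an illustration of the general idea, and the coefficient and exponent shown are hypothetical placeholders, not calibrated solder data.

```python
def coffin_manson_cycles(plastic_strain_range, ductility_coeff, ductility_exp):
    """Cycles to failure N_f from the Coffin-Manson low-cycle fatigue relation.

    Solves  plastic_strain_range / 2 = ductility_coeff * (2 * N_f) ** ductility_exp
    for N_f. The exponent must be negative (more strain means fewer cycles).
    """
    if plastic_strain_range <= 0 or ductility_coeff <= 0:
        raise ValueError("strain range and ductility coefficient must be positive")
    if ductility_exp >= 0:
        raise ValueError("fatigue ductility exponent must be negative")
    ratio = plastic_strain_range / (2.0 * ductility_coeff)
    return 0.5 * ratio ** (1.0 / ductility_exp)


# Hypothetical placeholder constants, chosen only to make the arithmetic visible.
EPS_F = 0.325   # fatigue ductility coefficient (assumed)
C_EXP = -0.5    # fatigue ductility exponent (assumed)

cycles_small_strain = coffin_manson_cycles(0.01, EPS_F, C_EXP)
cycles_large_strain = coffin_manson_cycles(0.02, EPS_F, C_EXP)
print(cycles_small_strain, cycles_large_strain)
```

With an exponent of -0.5, doubling the plastic strain range cuts the predicted life by a factor of four, which illustrates why smaller bumps that concentrate strain are more prone to fatigue failure.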

2.5D model mesh in Ansys Mechanical software

Discover Simulation Solutions

The semiconductor industry requires workflows and solutions that bridge the gap between semiconductor design engineers and physics analysts. Effective collaboration between these roles is essential but is complicated by issues such as encrypted file handling, multi-tool environments, intricate feature modeling, and the need for high-fidelity solutions.

To address the collaborative and technical challenges in 3D-IC structural integrity, solutions like Ansys RedHawk-SC Electrothermal software provide advanced capabilities to simulate and analyze the thermal and mechanical behavior of designs. Semiconductor engineers use it primarily for quick power integrity, thermal, and stress signoff, which lets them evaluate heat transfer, temperature distribution, and thermomechanical stresses efficiently. Users can model the geometry and material properties of 3D-IC components, including the silicon interposer, multiple dies, and interconnects. Additionally, RedHawk-SC Electrothermal software equips semiconductor design engineers, who may not be analysts, with an intuitive workflow to rapidly assess stresses and warpage, ensuring that designs meet performance and reliability specifications for quick signoffs from a single tool.

For detailed structural and thermal design, semiconductor packaging teams rely on industry-leading physics tools like Ansys Mechanical software, the Ansys LS-DYNA solution, and the Ansys Icepak application. Mechanical software enables in-depth heat transfer and stress analysis, helping engineers optimize material selection and structural design to minimize warpage and enhance reliability. Design optimization strategies, such as reducing TSV density in high-stress areas and applying multilevel submodeling with high-fidelity structural solvers like Ansys Mechanical APDL (MAPDL) and LS-DYNA software, further mitigate structural risks and enhance overall reliability.

The Icepak CFD-based thermal analysis tool helps package design engineers model heat dissipation and cooling strategies, ensuring thermal integrity across complex 3D-IC architectures. It also helps capture accurate heat transfer boundary conditions of the 3D-IC that can be used by RedHawk-SC Electrothermal software for a detailed thermal stress analysis. A simulation-driven design approach that leverages tools such as RedHawk-SC Electrothermal software, Mechanical software, and Icepak software facilitates the early prediction and resolution of reliability issues, ensuring robust semiconductor packaging designs.

Different customers have unique simulation workflows, and Ansys excels at addressing these needs with its open and interoperable tools. This enables semiconductor designers and analysts to collaborate effectively, accelerating design cycles while delivering high-fidelity solutions for electrical, thermal, and structural signoffs.

AI-driven thermal analysis is revolutionizing semiconductor design by enabling faster and more precise hotspot detection. AI-powered electrothermal modeling predicts hotspots in advance, enabling adaptive meshing to refine the resolution only where necessary. This approach significantly speeds up analysis while ensuring high accuracy.
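The idea of refining the mesh only where it is needed can be sketched in a few lines. The example below is a deliberately simplified stand-in: instead of an AI predictor, it uses a plain temperature-difference threshold on a coarse grid to flag the cells that a finer mesh should cover. The function name, grid values, and threshold are all hypothetical and are not taken from any Ansys product.

```python
def cells_to_refine(temps, threshold):
    """Return the set of (row, col) cells adjacent to a steep temperature step.

    temps is a rectangular list of lists of coarse-grid temperatures. A cell
    is flagged when it differs from a horizontal or vertical neighbor by more
    than threshold. This simple gradient rule stands in for a learned
    hotspot predictor (an assumption made for illustration).
    """
    rows, cols = len(temps), len(temps[0])
    flagged = set()
    for r in range(rows):
        for c in range(cols):
            # Check the right and lower neighbors so each pair is visited once.
            for dr, dc in ((0, 1), (1, 0)):
                nr, nc = r + dr, c + dc
                if nr < rows and nc < cols:
                    if abs(temps[r][c] - temps[nr][nc]) > threshold:
                        flagged.add((r, c))
                        flagged.add((nr, nc))
    return flagged


# Hypothetical coarse temperature map (degrees C) with one hotspot in the middle.
coarse_map = [
    [40, 41, 42],
    [41, 75, 43],
    [42, 43, 44],
]
refine = cells_to_refine(coarse_map, threshold=10)
print(sorted(refine))
```

Only the hotspot cell and its four direct neighbors are flagged here, so the four corner cells would keep the coarse resolution. That selective refinement is where the reduction in analysis time comes from.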

Typical workflow for evaluating the impact of thermal effects and warpage on SI/PI in 3D-ICs

Recent examples of structural integrity solutions in 3D-ICs demonstrate the impact of advanced simulation tools. Toshiba leveraged simulation technologies to enhance the reliability of automotive semiconductors by identifying and addressing critical failure mechanisms early in development. Similarly, a leading semiconductor company utilized Icepak simulations to optimize heat dissipation in a stacked-die configuration, achieving improved performance while maintaining reliability. TSMC leveraged Mechanical structural finite element analysis (FEA) software to simulate mechanical stresses induced by thermal gradients in 3D-ICs. This solution has been demonstrated to run efficiently on Microsoft Azure, ensuring rapid turnaround times for today's large and complex 2.5D/3D-IC systems. It effectively addresses the unique multiphysics requirements, enhancing the functional reliability of advanced designs built with TSMC’s 3DFabric — a comprehensive suite of 3D silicon stacking and advanced packaging technologies.

3D-ICs: Innovation, Collaboration, and Reliability

As 3D-IC technology continues to advance, the focus will remain on key areas such as predictive analytics, in which AI-driven simulations are used to identify potential failures before fabrication to enhance design reliability. Collaborative ecosystems will also play a vital role, fostering closer partnerships between EDA tool providers, material scientists, and design engineers to drive innovation. Additionally, ensuring seamless data transfer across tools like RedHawk-SC Electrothermal software, the Icepak application, and Mechanical software will be crucial in streamlining multiscale workflows. Ultimately, maintaining the structural integrity of 3D-ICs is essential for their successful integration into modern electronics. By leveraging advanced engineering solutions and simulation tools, including RedHawk-SC Electrothermal software, the Ansys Mechanical solution, the LS-DYNA application, and Icepak software, the industry can unlock the full potential of 3D-ICs to meet the stringent demands of reliability and performance.

Learn more about how RedHawk-SC Electrothermal software can help with your 3D-IC needs.